Cisco challenges Broadcom, Nvidia with 51.2Tbit switch ASIC of its own

Chip promises denser, greener networks – at least compared to the fire-breathing GPUs they connect

Cisco has piled onto the AI networking bandwagon, joining Broadcom and Nvidia with a 51.2Tbit/sec switch ASIC it claims is capable of bringing together at least 32,000 GPUs.

The switch ASIC, codenamed the G200, was developed under Cisco's Silicon One portfolio, and is targeted at bandwidth-hungry web-scale networks as well as larger AI/ML compute clusters.

The chip itself offers twice the bandwidth of Cisco's older G100 ASIC, doubling the number of 112Gbit/sec serializer/deserializers (SerDes) from 256 to 512. This allows for up to 64x 800Gbit/sec, 128x 400Gbit/sec, or 256x 200Gbit/sec ports, depending on the application and port density desired.
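The port options follow directly from the lane count. The sketch below assumes each 112Gbit/sec SerDes carries about 100Gbit/sec of Ethernet payload (the rest of the line rate goes to encoding and error correction) and that faster ports are built by ganging 2, 4, or 8 lanes together. The helper name `port_options` is hypothetical.

```python
# Illustrative arithmetic only, not Cisco's configuration tooling.
SERDES_LANES = 512       # G200 lane count, per Cisco
LANE_PAYLOAD_GBPS = 100  # assumed usable Ethernet rate per 112Gbit/sec lane

def port_options(lanes: int, lane_gbps: int) -> dict[int, int]:
    """Map port speed (Gbit/sec) to port count for 1, 2, 4, or 8 lanes per port."""
    options = {}
    for lanes_per_port in (1, 2, 4, 8):
        options[lanes_per_port * lane_gbps] = lanes // lanes_per_port
    return options

total_tbps = SERDES_LANES * LANE_PAYLOAD_GBPS / 1000
print(f"Aggregate: {total_tbps} Tbit/sec")
for speed, count in sorted(port_options(SERDES_LANES, LANE_PAYLOAD_GBPS).items(), reverse=True):
    print(f"{count}x {speed}Gbit/sec")
```

Every row multiplies out to the same 51.2Tbit/sec; the choice is purely how many lanes share a port.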

Realistically, we expect the bulk of switches powered by Cisco's G200 to cap out at 400Gbit/sec – that's the maximum bandwidth supported by PCIe 5.0 NICs today, and there just aren't that many applications – other than aggregation – that can take advantage of 800Gbit/sec Ethernet in the first place.
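The PCIe ceiling is easy to check. PCIe 5.0 signals at 32GT/s per lane with 128b/130b encoding, so an x16 slot tops out a little above 500Gbit/sec in each direction before protocol overheads. This rough sketch (function name hypothetical) ignores packet headers and flow control, so real throughput is lower still.

```python
# Rough upper bound on PCIe 5.0 link bandwidth; ignores TLP/flow-control overhead.
GT_PER_LANE = 32.0      # PCIe 5.0 transfer rate per lane
ENCODING = 128 / 130    # 128b/130b line encoding efficiency

def pcie5_gbps(lanes: int) -> float:
    """Approximate usable Gbit/sec in one direction for a PCIe 5.0 link."""
    return GT_PER_LANE * lanes * ENCODING

x16 = pcie5_gbps(16)
print(f"PCIe 5.0 x16: ~{x16:.0f} Gbit/sec per direction")
for nic_gbps in (400, 800):
    verdict = "fits" if nic_gbps <= x16 else "exceeds the slot"
    print(f"{nic_gbps}Gbit/sec NIC: {verdict}")
```

A 400Gbit/sec NIC fits within an x16 slot; an 800Gbit/sec one would be starved by the host interface.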

If you're feeling a bit of déjà vu at this point, that might be because we've seen similar ASICs and switches from Broadcom and Nvidia, with their Tomahawk 5 and Spectrum-4 lines, respectively. Both switches boast 51.2Tbit/sec of bandwidth and are positioned as alternatives to InfiniBand networks for large GPU compute clusters.

In fact, the Cisco G200 offers many of the same AI/ML-centric features and capabilities as its rivals. All three promise things like advanced congestion management, packet-spraying techniques, and link failover.
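To see why packet spraying matters, compare it with conventional per-flow ECMP hashing, where every packet of a flow is pinned to one uplink. With a few large flows, hash collisions can stack several flows on one link while others sit idle. The sketch below is a generic illustration, not any vendor's implementation: the CRC32 hash, eight uplinks, and flow names are all assumptions.

```python
import zlib
from collections import Counter

NUM_LINKS = 8  # hypothetical number of equal-cost uplinks

def ecmp_link(flow_id: str, links: int = NUM_LINKS) -> int:
    """Per-flow ECMP: every packet of a flow hashes to the same link."""
    return zlib.crc32(flow_id.encode()) % links

def flow_hash_load(flows: dict[str, int]) -> Counter:
    """Packets per link when whole flows are pinned to a single link."""
    load = Counter()
    for flow_id, packets in flows.items():
        load[ecmp_link(flow_id)] += packets
    return load

def spray_load(flows: dict[str, int], links: int = NUM_LINKS) -> Counter:
    """Per-packet spraying: packets are dealt round-robin across all links."""
    load = Counter()
    i = 0
    for packets in flows.values():
        for _ in range(packets):
            load[i % links] += 1
            i += 1
    return load

# Eight large "elephant" flows, the kind collective operations tend to produce.
flows = {f"gpu{n}->gpu{n + 8}": 1000 for n in range(8)}
print("flow hashing, busiest link:", max(flow_hash_load(flows).values()))
print("spraying,     busiest link:", max(spray_load(flows).values()))
```

Spraying spreads the load perfectly in this toy case, but packets of one flow can now arrive out of order, which the receiving end has to tolerate.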

These features matter because, beyond the fact that a single GPU can fully saturate a 400Gbit/sec link, the way workloads are distributed across these clusters makes them particularly sensitive to latency and congestion. If traffic gets backed up, GPUs can be left sitting idle, resulting in longer job completion times.
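A toy model shows why one backed-up link hurts the whole job. It assumes a synchronous training step in which every GPU computes and then waits for all of its peers' data, so the step finishes only when the slowest transfer does. The timings are hypothetical, not measurements.

```python
# Toy model (assumption): each synchronous step = compute + slowest transfer.

def step_time_ms(compute_ms: float, transfer_ms: list[float]) -> float:
    return compute_ms + max(transfer_ms)

def job_time_s(steps: int, compute_ms: float, transfer_ms: list[float]) -> float:
    return steps * step_time_ms(compute_ms, transfer_ms) / 1000

healthy = [10.0] * 1024             # every transfer takes 10ms
congested = [10.0] * 1023 + [25.0]  # one transfer stuck behind a congested link

print(job_time_s(10_000, 50.0, healthy))    # → 600.0
print(job_time_s(10_000, 50.0, congested))  # → 750.0
```

One slow flow out of 1,024 stretches the whole job by 25 percent in this model, because the other 1,023 GPUs sit idle waiting for it.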

All three vendors will tell you that these features, combined with something like RDMA over Converged Ethernet (RoCE), can achieve extremely low-loss networking using standard Ethernet and, by extension, reduced completion times.

"There are obviously multiple 51.2Tbit/sec switches on the market. My view of the situation is that not all 51.2Tbit/sec switches are created equal. A lot of people make claims; few people deliver on those claims," Rakesh Chopra, who heads up marketing for Cisco's Silicon One line, told The Register.

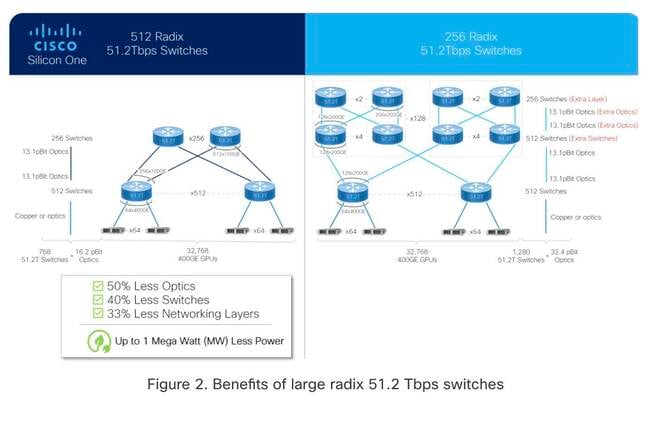

While Broadcom and Nvidia may have beaten Cisco to market, Chopra argued one of the G200's key differentiators is support for 512x radix configurations. Without getting into the nitty gritty, radix is the number of ports a switch can present, and a larger radix means smaller, tighter switch fabrics.


Cisco claims this allows the G200 to scale to clusters in excess of 32,000 GPUs – or around 4,000 nodes – using 40 percent fewer switches and half as many optics as a comparable network built on 256x radix switches. From what we can tell, this is a shot at Broadcom's Tomahawk 5, which highlights a 256x 200Gbit/sec radix, though it could also apply to Nvidia's Spectrum-4.
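The textbook sizing of a non-blocking two-tier leaf-spine fabric shows how radix drives scale. Built from identical k-port switches, each leaf sends half its ports down to endpoints and half up to spines, giving k leaves, k/2 spines, and k²/2 endpoint ports. The sketch below assumes that generic topology and, for the GPU count, the 4x 100Gbit/sec-per-GPU arrangement Velaga attributes to Cisco's example; it is not Cisco's published design, and the function names are hypothetical.

```python
def two_tier_fabric(radix: int) -> dict[str, int]:
    """Non-blocking two-tier leaf-spine from identical switches with `radix` ports.

    Each leaf: radix/2 ports down to endpoints, radix/2 up (one per spine).
    Each spine: one port per leaf, so there can be `radix` leaves.
    """
    half = radix // 2
    leaves, spines = radix, half
    return {
        "endpoint_ports": leaves * half,
        "leaves": leaves,
        "spines": spines,
        "switches": leaves + spines,
    }

def gpus_supported(radix: int, ports_per_gpu: int) -> int:
    """GPUs a two-tier fabric can attach if each GPU uses `ports_per_gpu` ports."""
    return two_tier_fabric(radix)["endpoint_ports"] // ports_per_gpu

for radix in (256, 512):
    print(radix, two_tier_fabric(radix))

print(gpus_supported(512, 4))  # 512 x 100G ports, 4 per 400G GPU
print(gpus_supported(256, 2))  # 256 x 200G ports, 2 per 400G GPU
```

Under these assumptions a 512-port switch reaches 32,768 GPUs in two tiers with 512 leaves plus 256 spines, or 768 switches, while a 256-port switch tops out at 16,384 GPUs and would need a third tier to go further.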

Cisco contends its Silicon One G200 ASIC allows for denser, more efficient networks, as depicted in this supplied graphic

Chopra claimed Cisco is able to do this because Silicon One's technologies are "massively" more efficient than competing switches. How much more efficient, we don't know – Chopra wouldn't tell us how much power the G200 uses.

He did say that, for a network topology capable of supporting 32,000 GPUs, using G200-based switches would translate into nearly a megawatt of power savings compared to competing products. While that may sound impressive, you probably wouldn't notice, given that a cluster of that size is apt to pull more than 40MW under load, and that's before you take datacenter cooling into consideration.
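Putting the two figures side by side shows the scale. Using the article's numbers (roughly 1MW saved against a draw of more than 40MW), the saving is at most about 2.5 percent of the cluster's power, and smaller still for larger draws. The 50MW and 60MW rows are hypothetical points for comparison.

```python
def savings_share(savings_mw: float, cluster_mw: float) -> float:
    """Fraction of total cluster draw represented by a given saving."""
    return savings_mw / cluster_mw

for cluster_mw in (40.0, 50.0, 60.0):
    print(f"{cluster_mw:.0f}MW cluster: {savings_share(1.0, cluster_mw):.1%}")
```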

Even so, Chopra contended that any savings are worthwhile, especially for companies whose sustainability goals mandate offsetting the power they use.

However, in an email to The Register, Ram Velaga, SVP of Broadcom's core switching group, argued the example described by Cisco was unrealistic and would never be deployed in the real world.

"For example, they show a 100Gbit/sec link in the fabric transitioning to a 400Gbit/sec link to the GPUs, which implies that you could not use cut-through operation. You'd instead need to use a higher latency store-and-forward operation," Velaga wrote.
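The reasoning behind Velaga's point: a cut-through switch starts forwarding once roughly the header has arrived, but if the egress link is faster than the ingress link it would run out of bits mid-frame, so the switch must buffer the whole frame first. The sketch below approximates the extra wait as the time to receive a full frame at the ingress rate; the frame sizes are illustrative assumptions.

```python
def serialization_ns(frame_bytes: int, link_gbps: float) -> float:
    """Time to clock a whole frame across a link, in nanoseconds."""
    return frame_bytes * 8 / link_gbps  # bits / (Gbit/sec) = ns

def added_store_and_forward_ns(frame_bytes: int, ingress_gbps: float) -> float:
    """Approximate extra wait when the full frame must arrive before forwarding.

    Ignores the small header time a cut-through switch would still spend.
    """
    return serialization_ns(frame_bytes, ingress_gbps)

for size in (1500, 4096, 9000):
    print(size, "bytes:", added_store_and_forward_ns(size, 100), "ns at 100Gbit/sec ingress")
```

The penalty is paid at every hop where the speed steps up, and it grows with frame size.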

Velaga added that Tomahawk 5 can in fact support a 32,000-GPU cluster in a two-tier network using 768 switches – the same number as Cisco.

We also reached out to Nvidia for comment, and had not heard back at the time of publication.

Silicon One ASICs are currently in customers' hands for integration into end products. However, Chopra wouldn't commit to a timeframe for when we can expect to see end products make their way into the datacenter. ®

Biting the hand that feeds IT